Detecting timing leaks in software, the easy way

What are timing attacks? How to write code free of timing leak vulnerabilities? How to use the verification tools dudect and TIMECOP? Some answers in this post, a joint work by JP Aumasson (Taurus CSO) and Tamine Kaourintin (Taurus summer intern).

Image credit: JP Aumasson, AI-generated with DALL·E.

1 :: From timing leaks to timing attacks

Timing attacks are a class of side-channel attacks that exploit timing leaks in computer programs in order to recover sensitive values (such as secret cryptographic keys). Unlike side-channel techniques such as electromagnetic and acoustic analysis, timing attacks do not necessarily require physical access to the target system. They are relevant not only to embedded systems, but to server applications as well.

A timing leak exists in a program when its execution time depends on the value of a secret. When this happens, the execution time inevitably reveals some information on the said secret. This may allows an attacker to recover the secret by measuring the execution/response time of the program/server and carrying out some additional calculation.

Exploiting a timing leak usually involves multiple steps, including:

- Prepare the attack: assessing the observable timing discrepancy, finding how to compute the secret from observables, estimating the number of samples required, and so on.

- Run measurements of the target system; this may involve non-trivial techniques to 1) set the system in a state where it'll do the targetted operation, and 2) isolate the part to measure from other operations.

- Analyze the results, using signal processing and mathematical tools, in order to calculate secret value. This step may involve some "brute force" search, which must be practically feasible.

1.1 :: Timing leak example

A simplistic example is the following, where SecretBit is a secret value that can be 1 or 0:

if SecretBit == 1:

DoSomethingSlow()

else if SecretBit == 0:

DoSomethingFast()Let's say this function is used by a remote system for a series of secret bits, and is computed for a single bit after a client sends some request whose response depends on the result of the computation.

The preparation step is simple: from the code, it is clear that if SecretBit is one (the ==1 case), this program will run a slow function, and if it is zero (the ==0 case) it will run a fast function.

The measurement step would then consist in sending a series of requests to the server (where each request would run the program for a distinct, random SecretBit values), and measuring their execution times.

The analysis would then consist in identifying the requests that were much faster to process than others; these "fast" cases are the ones where SecretBit is zero.

This contrived example illustrates why branchings depending on secret values are undesirable, if you want to avoid timing attacks. Cryptographic timing leaks are not necessarily more complex than that, but can also be quite sophisticated.

1.2 :: A brief history of timing attacks

The following public works are examples of attacks exploiting timing leaks, from the simplest to the most intricate, from the oldest to the most recent:

- The seminal 1996 paper Timing Attacks on Implementations of Diffie-Hellman, RSA, DSS, and Other Systems. This showed how simple implementations of integer exponentiation, as used in RSA and other public-key cryptosystems, could be broken by measuring execution times and treating the attack as a signal detection problem. This is essentially the cryptographic counterpart of our basic example above.

- The 2003 paper Remote Timing Attacks Are Practical, and its 2011 sequel Remote Timing Attacks Are Still Practical. These works showed how timing leaks in a remote server could be exploited, and identified defects in popular cryptography software.

- The 2005 paper Cache-timing attacks on AES, which showed that fast implementations based on look-up tables could be broken, owing to the variable memory look-up time depending on which part of the cache an element is stored.

- The 2009 post Timing attack in Google Keyczar library showed how an attacker could guess HMAC values — that is, authentication tags — by exploiting the fact that a byte comparison

self.Sign(msg) == sig_bytestakes more or less time depending on how many initial bytes are valid. - The 2018 Spectre microarchitectural attack family, which exploits speculative execution side effects, notably via timing discrepancies.

- The 2020 LadderLeak: Breaking ECDSA With Less Than One Bit Of Nonce Leakage. This showed that timing leaks of less than one bit of information on an ECDSA secret nonce can be enough to recover an ECDSA private key.

- The 2022 Hertzbleed: Turning Power Side-Channel Attacks Into Remote Timing Attacks on x86 paper. This showed how microprocessors’ context-dependent dynamic scaling (or “variable speed”), a performance optimization feature, can be exploited by adversaries.

These results show that timing leaks can be exploited at all layers of the software stack, from the microprocessor and its microcode to user applications written in a high-level language. Depending on the context, timing attacks may also be exploitable remotely, via REST API calls or other interfaces.

Timing attacks can indeed target embedded platforms, servers, and personal workstations alike, be these virtualized or bare-metal. They can leverage software idiosyncracies as well as properties of hardware components, and may be exploited in various creative ways on embedded platforms. In this post, however, we will restrict ourselves to pure software timing leaks.

2 :: The problem: producing constant-time code

If a piece of software can't leak information through timing, then it is unlikely to be vulnerable to timing attacks. The goal of so-called constant-time time is to produce such software.

However, the term “constant-time” can be a misnomer: you can't expect a program that encrypts arbitrary files to run in constant time, as in always the same time; encrypting a 10-megabyte file will take longer than encrypting a 10-byte one. What you want, instead, is that the execution time, as counted in CPU cycles (but sometimes in wall time, cf. Hertzbleed), be independent of the value of the secret data (secret keys, plaintexts, internal randomness, and so on).

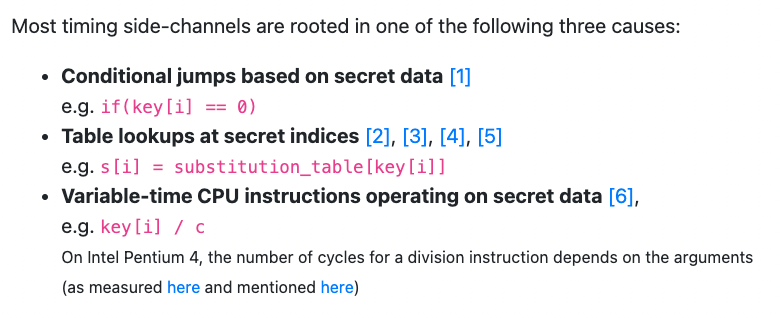

As the page of the TIMECOP utility explains, the cause of timing leaks is seemingly simple:

Therefore, conditional branchings must be eliminated when secret data is involved. For example, OpenSSL uses constant-time comparators when needed, using functions such as constant_time_eq() below, which calls constant_time_is_zero(), and avoids if conditions or other non-constant-time operations. This example for unsigned int types, but similar functions are available for other types:

static ossl_inline unsigned int constant_time_is_zero(unsigned int a)

{

return constant_time_msb(~a & (a - 1));

}

static ossl_inline unsigned int constant_time_eq(unsigned int a,

unsigned int b)

{

return constant_time_is_zero(a ^ b);

}Constant-time programming is hard though, especially when the goal is to produce constant-time software across a multitude of hardware platforms, operating systems, and compiler toolchains. As the BearSSL author noted, “Constant-time development thus requires heavy mental gymnastics to try to predict what various present and future compilers will do to the code, fueled by a maniacal knowledge of the darkest details for the C standard.”

One reason why constant-time programming is hard is that constant-time-looking source code does not necessarily lead to constant-time software, after compilation of the code, owing for example to :

- The compiler’s translation of source code into binary, where performance optimization may introduce timing leaks, and where timing leaks may only occur for certain platforms. See for example this case of a Curve25519 implementation only found non-constant-time when compiled for 32-bit architectures using MSVC 2015.

- CPU instructions that have different latency and/or throughput depending on the operands type and value. For example, the table below from BearSSL’s documentation shows which multiplications are and are not constant-time for various ARM architectures:

If some logic cannot be made constant-time, one may try to hide the information by introducing some jitter, as random delay, or by introducing “dummy” operations. Such tricks may diminish the signal, and may or may not be sufficient to thwart realistic attacks—as opposed to “lab” attacks.

To “validate” that a program has no blatant timing leak, and to detect leaks in other programs, techniques used include:

- Manual review of the source code

- Automated testing, using dedicated tools

The next section covers the latter point.

3 :: Tools for detecting timing leaks

There are tools to detect timing leaks in software, many which are listed in this post, but a number of which are not public, or outdated, or unusable. The usage and practical value of such tools was studied in this research paper.

Testing for timing leaks usually involves two steps:

- Telling the tool what are the secret variable(s) whose value should not influence the program's execution time. This is usually straightforward.

- Running the actual test. This is of course the hard part.

As with other types of security testing, timing leak testing can be either:

- Static, by analysis the code (source or binary) using formal or symbolic methods , but without executing the code. For example, Binsec/Rel uses symbolic execution from the binary, while AWS' SideTrail works at the source code and LLVM intermediate representation level. Each approach has its pros and cons, and best suitable for different use cases.

- Dynamic, by analysing the binary code as it's running, looking for non-constant-time instructions or patterns, or just measuring the execution time — which sounds naive and limited, but often does the job. We'll see examples of these two approaches in the rest of this post, with TIMECOP and dudect, respectively.

3.1 :: dudect, measure execution time and apply statistics

Presented in the 2017 paper dude, is my code constant time?, and available on GitHub, dudect is a small tool (around 350 lines of code) that simply measures execution times with respect to different values of the target secret, and then attempts to distinguish the empirical distributions. It's just basic statistics, inspired by hardware side-channel analysis, but works surprisingly well even with minute timing discrepancies.

dudect is written in C and directly works for C and C++ code. Programs in other languages can be tested by writing simple C wrappers. No change to the tested code is needed, but a simple ancillary program must be written to describe to dudect the interface to test and the input argument that is secret.

After running a test, dudect will return one of three guesses based on a statistical indicator's value, as described in the code:

if (max_t > t_threshold_bananas) {

printf(" Definitely not constant time.\n");

return DUDECT_LEAKAGE_FOUND;

}

if (max_t > t_threshold_moderate) {

printf(" Probably not constant time.\n");

return DUDECT_LEAKAGE_FOUND;

}

if (max_t < t_threshold_moderate) {

printf(" For the moment, maybe constant time.\n");

}As the paper concludes:

Our solution is conceptually simple, scales well and has modest speed/memory requirements.

Other pros of dudect include:

- Usability: it's straightforward to integrate (a single dudect.h file) and little additional development is needed.

- Efficiency: dudect succeeds at detecting small leaks that it's expected to detect, as per mathematical bounds on distinguishing distributions vs. number of sample.

- Will detect timing leaks caused by variable-time CPU instructions.

Cons include:

- Slow: many iterations are needed to detect leaks.

- dudect can miss minute leaks if an insufficient number of samples is used.

- The source of the leak is not identified, as dudect works in a black-box way and returns a guess about constant-timeness.

3.2 :: TIMECOP, build atop Valgrind

The venerable Valgrind tool suite is mostly known for detecting memory leaks, but is in fact a general instrumentation framework for building dynamic analysis tools. The idea to leverage Valgrind for detecting timing leaks is due to Langley, with his 2010 ctgrind patch, which used the following trick:

Valgrind includes a memory error detector tool called Memcheck, which will notably report occurrences of uninitialised data being used in branching conditions or to index a memory access. These are precisely the causes of a large class of timing leaks, so Langley's trick was to flag secret values as uninitialized – via the ct_poison() function – and let Memcheck do its job and report any case of timing leak.

TIMECOP is essentially a repackaging of Langley's approach, applied to cryptographic algorithms in the SUPERCOP benchmarking suite, and publishing its results.

TIMECOP/Memcheck's pros include:

- Usability: As dudect, TIMECOP is straightforward to use.

- Speed: Unlike dudect, a single run of the program is enough.

- The source of the leak will be identified and reported (unlike with dudect).

Cons include:

- "Valgrind cannot spot cases where variable-time code is caused by variable-time CPU instructions." (citing the TIMECOP page)

- "Some implementations mix public and secret data for various reasons. For example, in some implementations, the secret key also contains a copy of the public key. This can cause TIMECOP to flag an implementation as variable-time, even though the timing variances depend on public data." (citing the TIMECOP page).

- The results are less human-readable than dudect's, and useful information can be buried among many non-useful warnings and errors.

4 :: Usage and results on a toy example

To showcase the usage and effectiveness of dudect and TIMECOP, we'll use as an example the tiny_sha3 C implementation of SHA3/Keccak by Markku-Juhani O. Saarinen. We choose this example for its relevant (cryptographic primitive), the simplicity and succinctness of its code, and the assurance that it is constant-time, unless we modify it.

To test the detection of a timing leak, we create the variant "Alt-SHA3" by introducing 3 lines code in sha3.c, after the computation of the permutation in sha3_keccakf():

// Iota

st[0] ^= keccakf_rndc[r];

// TIMING LEAK

if (st[0] > st[1]) {

st[0] ^= st[1];

}

// END TIMING LEAK

}

#if __BYTE_ORDER__ != __ORDER_LITTLE_ENDIAN__

// endianess conversion. this is redundant on little-endian targets

for (i = 0; i < 25; i++) {

v = (uint8_t *) &st[i];

t = st[i];

We will then mark the input to the hash function as secret, and since the internal state st depends on the input, it is "contaminated" and thus seen as secret as well. The condition st[0] > st[1] is thus secret as well, whereas we expect it to leak via timing measurements.

4.1 :: Testing with dudect

To run dudect on our tiny SHA3 (and on its evil twin Alt-SHA3), it suffices to copy the file dudect.h into the code's directory, and to create a small program, for which we will start from the example dut_aes32.c provided in dudect's repository example/ directory. We'll call this copy dut_sha3.c.

In the latter file, we'll just modify the do_one_computation() function as follows:

uint8_t do_one_computation(uint8_t *data) {

uint8_t in[512] = {0};

uint8_t out[32] = {0};

uint8_t ret = 0;

memcpy(in, data, 512);

sha3(in, 512, out, 32);

ret ^= out[0];

/* return some computation output to try to tame a clever optimizing compiler */

return ret;

}This function runs SHA3 on a fixed-length, 512-byte input, whose value is controlled by dudect.

It then suffices to compile the code as

gcc -O3 sha3.c dut_sha3.c -lm -o test

Note that we add the -O3 optimization flag, to make the binary code closer to what would be used in practive. Generally, the code should be tested in similar conditions (toolchain, options, etc.) as the code that would be effectively used.

Launching ./test on the original, constant-time SHA3 will leave dudect running and reporting "maybe constant time" until you interrupt the search:

meas: 0.20 M, max t: +2.27, max tau: 5.08e-03, (5/tau)^2: 9.70e+05. For the moment, maybe constant time.

meas: 0.30 M, max t: +2.49, max tau: 4.55e-03, (5/tau)^2: 1.21e+06. For the moment, maybe constant time.

meas: 0.40 M, max t: +1.90, max tau: 3.02e-03, (5/tau)^2: 2.75e+06. For the moment, maybe constant time.

meas: 0.02 M, max t: +2.30, max tau: 1.60e-02, (5/tau)^2: 9.76e+04. For the moment, maybe constant time.

meas: 0.02 M, max t: +2.32, max tau: 1.48e-02, (5/tau)^2: 1.14e+05. For the moment, maybe constant time.

meas: 0.03 M, max t: +2.21, max tau: 1.38e-02, (5/tau)^2: 1.32e+05. For the moment, maybe constant time.

meas: 0.80 M, max t: +2.11, max tau: 2.36e-03, (5/tau)^2: 4.47e+06. For the moment, maybe constant time.However, with the non-constant-time Alt-SHA3, dudect instantaneously detects the leak:

meas: 0.09 M, max t: +10.69, max tau: 3.59e-02, (5/tau)^2: 1.94e+04. Probably not constant time.Note: ARM vs. x86

Important notes:

- For reliable results, you should disable the CPU's dynamic frequency scaling, which can be done on Linux with the following command:

echo "0" | sudo tee /sys/devices/system/cpu/cpufreq/boost- dudect uses the x86 assembly instruction

RDTSC, and thus will not work with ARM chips. The cycles count code should be modified accordingly.

4.2 :: Testing with TIMECOP

Assuming you have Valgrind installed, copy TIMECOP's poison.h into the tiny_sha3/ directory, and create the following program, in a file that we'll name sha3grind.c:

#include <stdlib.h>

#include <stdint.h>

#include <string.h>

#include "keccak.h"

#include "poison.h"

int main(){

int ret;

uint8_t in[512] = {0};

uint8_t out[32] = {0};

poison(&in, sizeof(uint8_t) * 512);

keccak(in, 512, out, 32);

}

Then compile as follows:

gcc -g -O3 sha3.c sha3grind.c -o test

Note that we add the -g debug flag provides Memcheck with symbols, to return more readable output.

Then, launch Valgrind/Memcheck as follows, where --track-origins=yes instructs Memcheck to report uninitialised data – which will report cases where secret data, labelled as uninitialised, affects the program's control flow:

valgrind --track-origins=yes ./test

With the clean, constant-time SHA3, we'll obtain the following results:

==12519== Memcheck, a memory error detector

==12519== Copyright (C) 2002-2017, and GNU GPL'd, by Julian Seward et al.

==12519== Using Valgrind-3.15.0 and LibVEX; rerun with -h for copyright info

==12519== Command: ./test

==12519==

==12519==

==12519== HEAP SUMMARY:

==12519== in use at exit: 0 bytes in 0 blocks

==12519== total heap usage: 0 allocs, 0 frees, 0 bytes allocated

==12519==

==12519== All heap blocks were freed -- no leaks are possible

==12519==

==12519== For lists of detected and suppressed errors, rerun with: -s

==12519== ERROR SUMMARY: 0 errors from 0 contexts (suppressed: 0 from 0)However, with Alt-SHA3, Valgrind will report the following:

==12629== Conditional jump or move depends on uninitialised value(s)

==12629== at 0x109998: sha3_keccakf (sha3.c:84)

==12629== by 0x109BB7: sha3_update (sha3.c:132)

==12629== by 0x109BB7: sha3 (sha3.c:165)

==12629== by 0x10912C: main (sha3grind.c:13)

==12629== Uninitialised value was created by a client request

==12629== at 0x10910F: main (valgrind_keccak.c:13)

==12629==

==12629== Conditional jump or move depends on uninitialised value(s)

==12629== at 0x109998: sha3_keccakf (sha3.c:84)

==12629== by 0x109BE4: sha3_final (sha3.c:149)

==12629== by 0x109BE4: sha3 (sha3.c:166)

==12629== by 0x10912C: main (sha3grind.c:13)

==12629== Uninitialised value was created by a client request

==12629== at 0x10910F: main (sha3grind.c:13)

==12629==This clearly indicates that 1) the code is not constant-time, and 2) the location of the branching causing the leak.

5 :: Conclusion

We showed the principle of timing attacks and how to use our favorite tools. It may look like eliminating timing leaks is simple, but in practice it's not, due to:

- The sheer amount of code in modern software projects, be it the developer's own code or external dependencies.

- The different behavior on different platform, and the lack of documentation and knowledge about many systems.

- The fact that, in some cases, timing leaks are inevitable, either to avoid prohibitive performance overhead, or because of how the system is designed (think of cases:

if (success) then do .. else do ..).

As the cryptographic adage says, attacks always get better, rarely worse, so new types of timing attacks will certainly be discovered.